# -*- coding: utf-8 -*-
import os
import argparse
import gym
import numpy as np
from itertools import count

import torch
import torch.nn as nn
import torch.nn.functional as F
import torch.optim as optim
from torch.autograd import Variable
from torch.distributions import Categorical

parser = argparse.ArgumentParser(description='PyTorch policy gradient example at openai-gym pong')
parser.add_argument('--gamma', type=float, default=0.99, metavar='G',
                    help='discount factor (default: 0.99)')


parser.add_argument('--decay_rate', type=float, default=0.99, metavar='G',
                    help='decay rate for RMSprop (default: 0.99)')
parser.add_argument('--learning_rate', type=float, default=1e-4, metavar='G',
                    help='learning rate (default: 1e-4)')

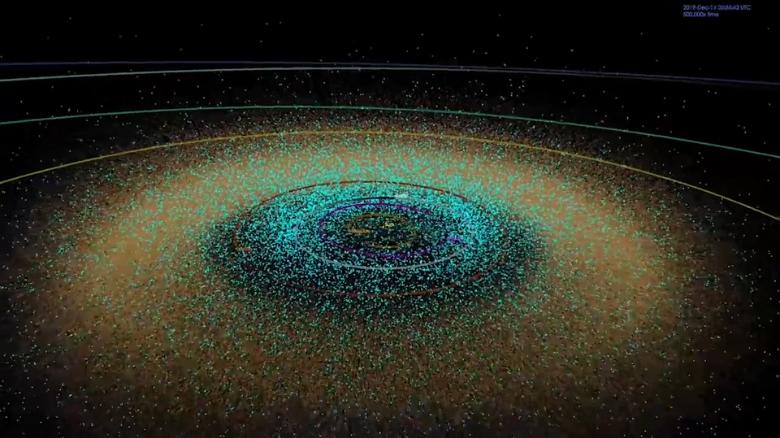


parser.add_argument('--batch_size', type=int, default=10, metavar='G',
                    help='Every how many episodes to do a param update')
parser.add_argument('--seed', type=int, default=87, metavar='N',
                    help='random seed (default: 87)')
args = parser.parse_args()

# Create the environment and seed it for reproducibility.
# env.seed() assumes the classic gym API (gym < 0.26).
env = gym.make('Pong-v0')
env.seed(args.seed)

torch.manual_seed(args.seed)


def prepro(I):
    """ prepro 210x160x3 into 6400 """
    I = I[35:195]        # crop to the playing field
    I = I[::2, ::2, 0]   # downsample by a factor of 2, keep one colour channel
    I[I == 144] = 0      # erase background (type 1)
    I[I == 109] = 0      # erase background (type 2)
    I[I != 0] = 1        # paddles and ball become 1
    return I.astype(np.float32).ravel()


class Policy(nn.Module):
    def __init__(self):
        super(Policy, self).__init__()
        self.affine1 = nn.Linear(6400, 200)
        self.affine2 = nn.Linear(200, 3)  # action 1 = stay, action 2 = up, action 3 = down

        self.saved_log_probs = []
        self.rewards = []

    def forward(self, x):
        x = F.relu(self.affine1(x))
        action_scores = self.affine2(x)
        return F.softmax(action_scores, dim=1)


# build the policy network
policy = Policy()

# check for and load a pretrained model
if os.path.isfile('pgparams.pkl'):
    print('Load Policy Network parameters ...')
    policy.load_state_dict(torch.load('pgparams.pkl'))

# construct the optimizer
# alpha is RMSprop's smoothing constant (the decay rate);
# weight_decay would add an L2 penalty instead
optimizer = optim.RMSprop(policy.parameters(), lr=args.learning_rate, alpha=args.decay_rate)


def select_action(state):
    state = torch.from_numpy(state).float().unsqueeze(0)
    probs = policy(Variable(state))
    m = Categorical(probs)
    action = m.sample()  # sample from the multinomial distribution
    policy.saved_log_probs.append(m.log_prob(action))  # store the action's log-probability for the backward pass
    return action.item()


def finish_episode():
    R = 0
    policy_loss = []
    rewards = []
    # accumulate discounted returns, walking backwards through the rewards
    for r in policy.rewards[::-1]:
        R = r + args.gamma * R
        rewards.insert(0, R)
    # turn rewards into a PyTorch tensor and standardize
    rewards = torch.Tensor(rewards)
    rewards = (rewards - rewards.mean()) / (rewards.std() + np.finfo(np.float32).eps)
    for log_prob, reward in zip(policy.saved_log_probs, rewards):
        policy_loss.append(-log_prob * reward)
    # Clearing the optimizer's gradients is standard PyTorch practice; see the official documentation
    optimizer.zero_grad()
    policy_loss = torch.cat(policy_loss).sum()
    policy_loss.backward()
    optimizer.step()

    # clean rewards and saved log-probabilities
    del policy.rewards[:]
    del policy.saved_log_probs[:]


# Main loop
running_reward = None
reward_sum = 0
for i_episode in count(1):
    state = env.reset()
    for t in range(10000):
        state = prepro(state)
        action = select_action(state)
        # The network outputs 0, 1, 2.
        # According to the gym settings: action 1 = stay, action 2 = up, action 3 = down,
        # so we add 1 to the action.
        action = action + 1
        # 4-tuple return assumes the classic gym API (gym < 0.26)
        state, reward, done, _ = env.step(action)
        reward_sum += reward

        policy.rewards.append(reward)
        if done:
            # tracking log
            running_reward = reward_sum if running_reward is None else running_reward * 0.99 + reward_sum * 0.01
            print('resetting env. episode reward total was %f. running mean: %f' % (reward_sum, running_reward))
            reward_sum = 0
            break

        if reward != 0:
            print('ep %d: game finished, reward: %f' % (i_episode, reward) + ('' if reward == -1 else ' !!!!!!!'))

    # use the policy gradient to update the model weights
    if i_episode % args.batch_size == 0:
        print('ep %d: policy network parameters updating...' % (i_episode))
        finish_episode()

    # save the model every 50 episodes
    if i_episode % 50 == 0:
        print('ep %d: model saving...' % (i_episode))
        torch.save(policy.state_dict(), 'pgparams.pkl')

# Reference
# 1. Karpathy pg-pong.py
# 2. PyTorch official example: https://github.com/pytorch/examples/blob/master/reinforcement_learning/reinforce.py